
[NLP] A Free Format Legal Question Answering System, Khazaeli et al., 2021


서졍 2023. 10. 3. 13:15

A Free Format Legal Question Answering System

Soha Khazaeli, Janardhana Punuru, Chad Morris, Sanjay Sharma, Bert Staub, Michael Cole, Sunny Chiu-Webster, Dhruv Sakalley. Proceedings of the Natural Legal Language Processing Workshop 2021. 2021.

aclanthology.org

Abstract

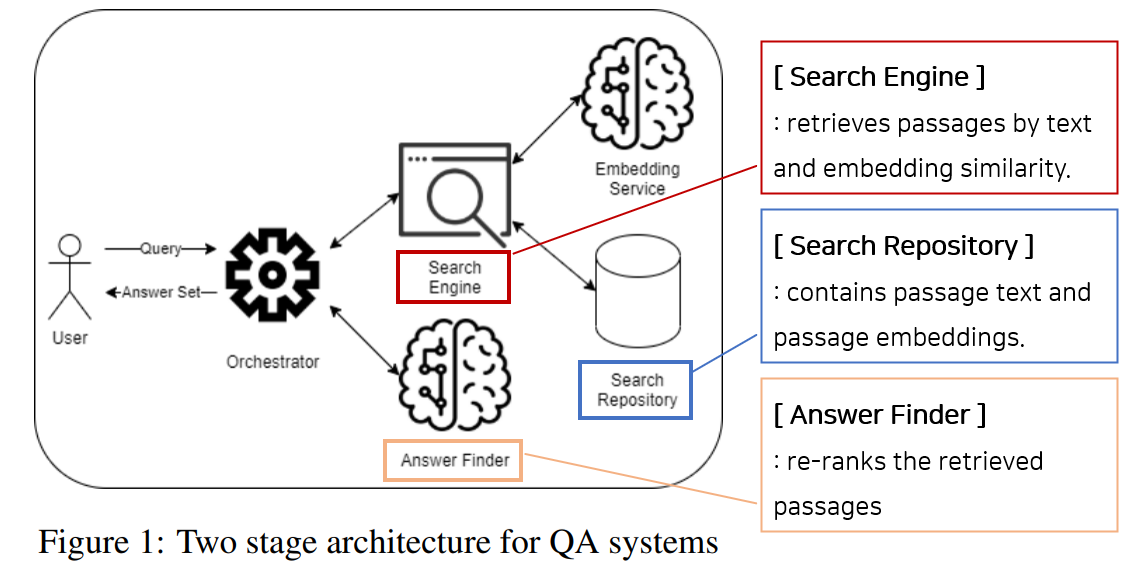

Information retrieval-based question answering system

- Not limited to a predefined set of questions or patterns.

- Retrieval : sparse vector search (such as BM25) and embeddings.

- Re-ranking : the retrieved passages are the input to a BERT-based answer re-ranking system.

1. Introduction

(1) Factoid (fact-based) questions

- e.g. "What is the burden of proof for breach of contract?"

- A short answer is sufficient.

(2) Non-factoid questions

- e.g. "Why does child support increase with income?"

- Open-ended; a fuller, explanatory answer is needed.

[ A Legal Domain QA system ]

▷ It should provide complete, multi-sentence answers with context.

▷ It must also handle questions for which no single answer exists.

▷ The best answer can depend on the lawyer's perspective.

The paper presents a retrieval-based legal QA system intended to provide useful answers across all legal practice areas.

2. Related Work

(1) Recent QA research : Retrieve-and-Read paradigm

1. Retrieval step : selects candidate documents.

2. Reading step : finds answers in those candidates.

→ The paper adopts a similar approach (two-step architecture).

(2) Standard Retrieval Methods

1. Sparse vector space : e.g. TF-IDF, BM25

2. Dense vector space : e.g. LSA, GloVe, semantic embeddings
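As a rough illustration of the sparse side, here is a minimal pure-Python sketch of Okapi BM25 scoring. The parameter values `k1=1.5` and `b=0.75`, the whitespace tokenization, and the toy passages are assumptions for the example, not settings taken from the paper.

```python
import math
from collections import Counter


def bm25_scores(query, passages, k1=1.5, b=0.75):
    """Score each tokenized passage against a tokenized query with Okapi BM25."""
    n = len(passages)
    avg_len = sum(len(p) for p in passages) / n
    # Document frequency: number of passages that contain each term.
    df = Counter(term for p in passages for term in set(p))
    scores = []
    for p in passages:
        tf = Counter(p)
        score = 0.0
        for term in query:
            if term not in tf:
                continue
            # Smoothed IDF that stays non-negative for very common terms.
            idf = math.log(1 + (n - df[term] + 0.5) / (df[term] + 0.5))
            # Term-frequency saturation with passage-length normalization.
            norm = tf[term] + k1 * (1 - b + b * len(p) / avg_len)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores


# Toy passages (assumed data, whitespace-tokenized).
passages = [
    "the airline is liable for the negligence of its pilot".split(),
    "child support increases with parental income".split(),
    "the burden of proof for breach of contract".split(),
]
query = "airline pilot negligence".split()
scores = bm25_scores(query, passages)
best = max(range(len(passages)), key=scores.__getitem__)
```

Passages that share no term with the query score exactly zero, which is the weakness that dense embeddings are meant to cover.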

(3) COLIEE (Competition on Legal Information Extraction/Entailment) tasks

1. Answering yes/no legal questions

2. Retrieving German legal documents

▶ In contrast to previous work, which handles a limited range of questions, this system is designed to answer almost all legal content questions without legal practice area restrictions.

3. Methodology

The system selects answers by re-ranking passages retrieved with both a sparse vector technique (BM25) and a dense vector approach (semantic embeddings).

3.1. Retrieving Passages

Search Engine & Search Repository

Goal: retrieve relevant passages.

The retriever must find passages that contain sufficient context.

→ It uses both sparse vector and semantic embedding passage representations.

- QBD (Query By Document; Yang et al., 2018), implemented with BM25, for sparse vector passage retrieval.

- Legal GloVe and Legal Siamese BERT (Reimers and Gurevych, 2019) embeddings for dense embedding enrichment.

Legal Siamese BERT

- Objective : to retrieve similar passages in vector space.

- The most similar headnote found with BM25 is treated as the positive (similar) passage.

- Five random headnotes are added as negative instances.

▷ The system was trained using a regression objective function with cosine loss.

▷ Sentence embeddings are computed with the Legal BERT base model, using mean pooling over the token embeddings.
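The Legal Siamese BERT setup described above (one BM25-selected positive, five random negatives, mean pooling, a cosine-based regression loss) can be sketched in plain Python. This is only an illustration: the target labels 1.0 and 0.0, the squared-error form of the loss, and the helper names `mean_pool`, `cosine_regression_loss`, and `build_training_pairs` are assumptions, and the token vectors stand in for real Legal BERT outputs.

```python
import math
import random


def mean_pool(token_vectors, attention_mask):
    """Average token vectors, ignoring padded positions (mask == 0)."""
    dim = len(token_vectors[0])
    kept = [v for v, m in zip(token_vectors, attention_mask) if m == 1]
    return [sum(v[i] for v in kept) / len(kept) for i in range(dim)]


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def cosine_regression_loss(u, v, label):
    """Squared error between the cosine similarity and the target label."""
    return (cosine(u, v) - label) ** 2


def build_training_pairs(anchor, bm25_best, pool, num_negatives=5, seed=0):
    """One positive pair (BM25 best match) plus random negative pairs."""
    rng = random.Random(seed)
    candidates = [h for h in pool if h not in (anchor, bm25_best)]
    negatives = rng.sample(candidates, num_negatives)
    # Labels 1.0 / 0.0 are assumed targets for the regression objective.
    return [(anchor, bm25_best, 1.0)] + [(anchor, neg, 0.0) for neg in negatives]


# Toy example: the third token is padding and is excluded from the mean.
pooled = mean_pool([[1.0, 0.0], [3.0, 2.0], [9.0, 9.0]], [1, 1, 0])
headnotes = [f"h{i}" for i in range(10)]  # hypothetical headnote IDs
pairs = build_training_pairs("h0", "h1", headnotes)
```

During training the loss would be averaged over a batch of such pairs and backpropagated through both (weight-sharing) encoder towers.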

3.2. Answer Finder

Answer Finder

Goal: accept a question-passage pair and compute the probability that the passage answers the question.

- A BERT sequence binary classifier (Devlin et al., 2019) is trained on question-answer pairs.

- It feeds the [CLS] representation through two fully connected layers followed by a final softmax layer.

- Answer Finder's input is the concatenation of the question (Q) and the passage (P) : "[CLS]<Q>[SEP]<P>[SEP]"

- Answer Finder is trained by fine-tuning Legal BERT.

- fine-tuned in two stages,...
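The input format and the re-ranking step can be sketched as follows. This is a toy illustration, not the paper's code: `toy_score_fn` is a hypothetical stand-in for the fine-tuned Legal BERT classifier, and in practice a tokenizer would insert the special tokens rather than string formatting.

```python
import math


def build_input(question, passage):
    """Concatenate question and passage in the [CLS]<Q>[SEP]<P>[SEP] layout."""
    return f"[CLS]{question}[SEP]{passage}[SEP]"


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def rerank(question, passages, score_fn):
    """Sort passages by P(passage answers question), highest first.

    score_fn maps the input string to two logits: [not_answer, answer].
    """
    scored = []
    for p in passages:
        logits = score_fn(build_input(question, p))
        scored.append((softmax(logits)[1], p))
    return sorted(scored, key=lambda item: item[0], reverse=True)


def toy_score_fn(text):
    # Hypothetical stand-in for the classifier: counts keyword hits.
    hits = sum(text.lower().count(w) for w in ("airline", "negligence"))
    return [0.0, float(hits)]


ranked = rerank(
    "Is an airline liable?",
    ["Child support rules.", "An airline may be liable for negligence."],
    toy_score_fn,
)
```

Each retrieved candidate is scored independently, so passages coming from the BM25 and embedding retrievers can be pooled and sorted by the same probability.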

4. Results

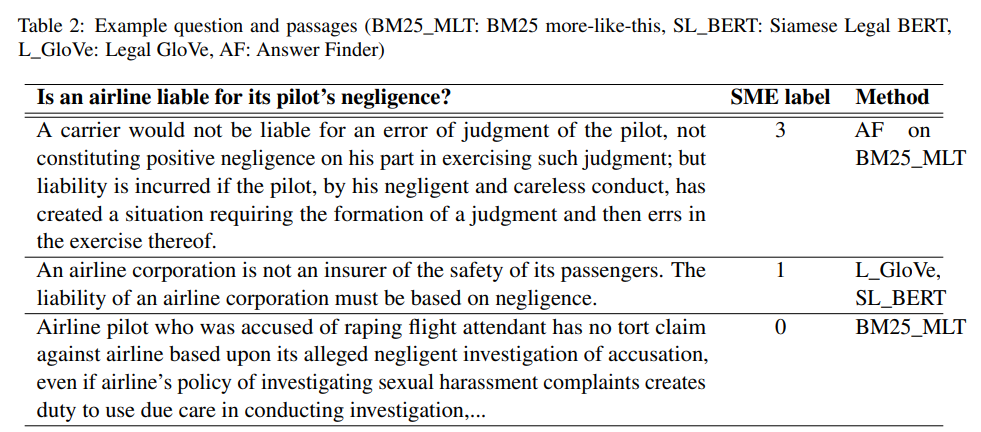

Table 2 shows the retrieved passages, the system answers, and the SME (subject matter expert) evaluation for the question "Is an airline liable for its pilot's negligence?"

[Brief interpretation]

BM25_MLT picked a long passage with multiple occurrences of 'airline', 'pilot', 'liable' and 'negligence'.

Legal GloVe and Legal Siamese BERT picked a semantically similar short passage even though 'pilot' does not appear in it.

▷ Metrics

1. Classifier performance metrics = F1, Accuracy

2. Ranked search results evaluation metrics = DCG (Discounted Cumulative Gain), MRR (Mean Reciprocal Rank)
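For reference, the four metrics can be computed as in the sketch below. It uses the common linear-gain form of DCG with a log2 rank discount; the exact gain definition and relevance scale used in the paper are not given in these notes, so treat those details as assumptions.

```python
import math


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the gold labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def f1_score(y_true, y_pred, positive=1):
    """Harmonic mean of precision and recall for the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def dcg(relevances):
    """Discounted cumulative gain of one ranked list (linear gain)."""
    return sum(rel / math.log2(rank + 1)
               for rank, rel in enumerate(relevances, start=1))


def mrr(ranked_relevance_lists):
    """Mean reciprocal rank of the first relevant result per query."""
    total = 0.0
    for rels in ranked_relevance_lists:
        for rank, rel in enumerate(rels, start=1):
            if rel > 0:
                total += 1.0 / rank
                break
    return total / len(ranked_relevance_lists)
```

For example, `dcg([3, 0, 1])` is 3/log2(2) + 0 + 1/log2(4) = 3.5, and `mrr([[0, 1, 0], [1, 0, 0]])` is (1/2 + 1)/2 = 0.75.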